GenAI and LLM Product Paradigms

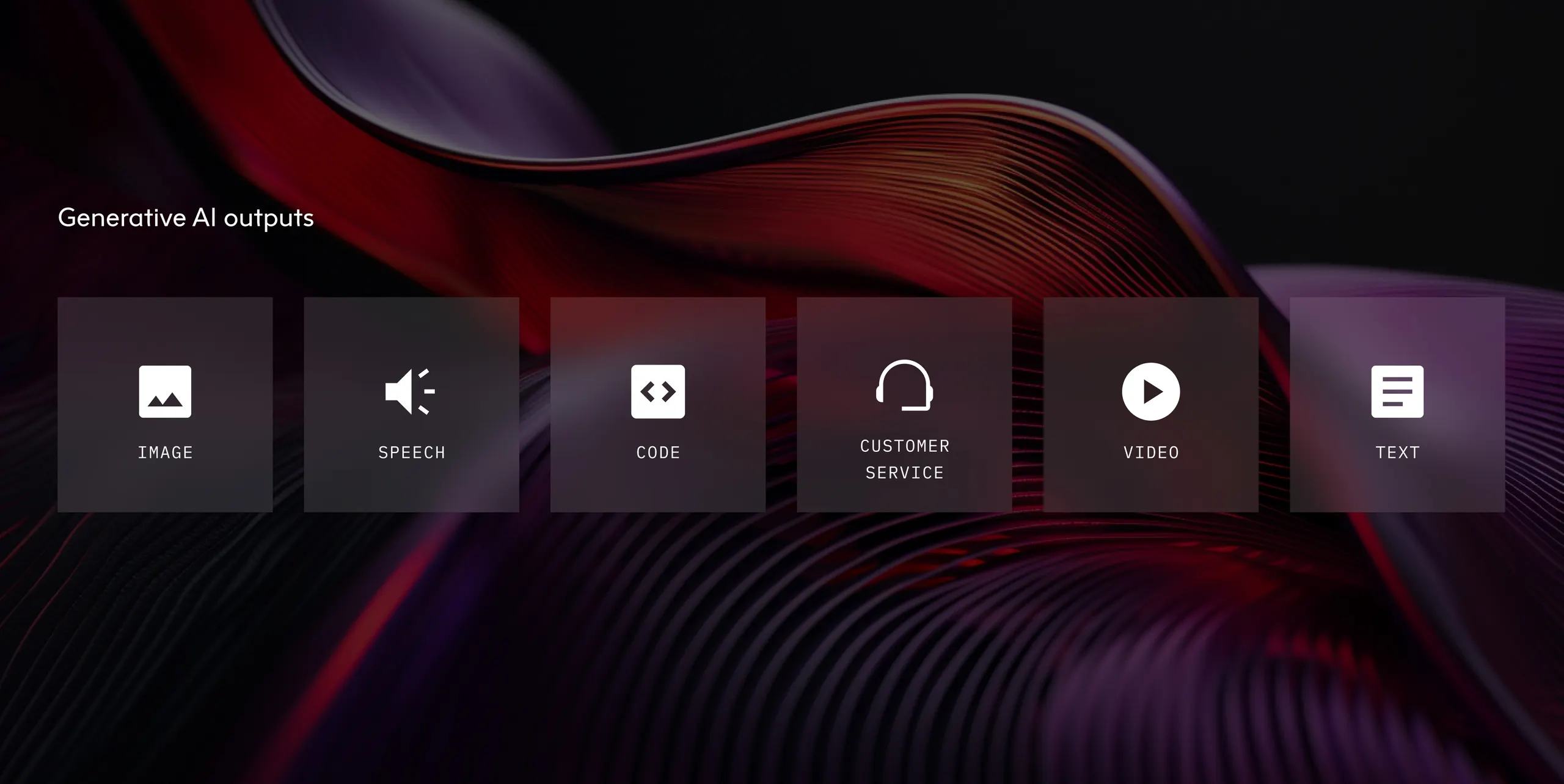

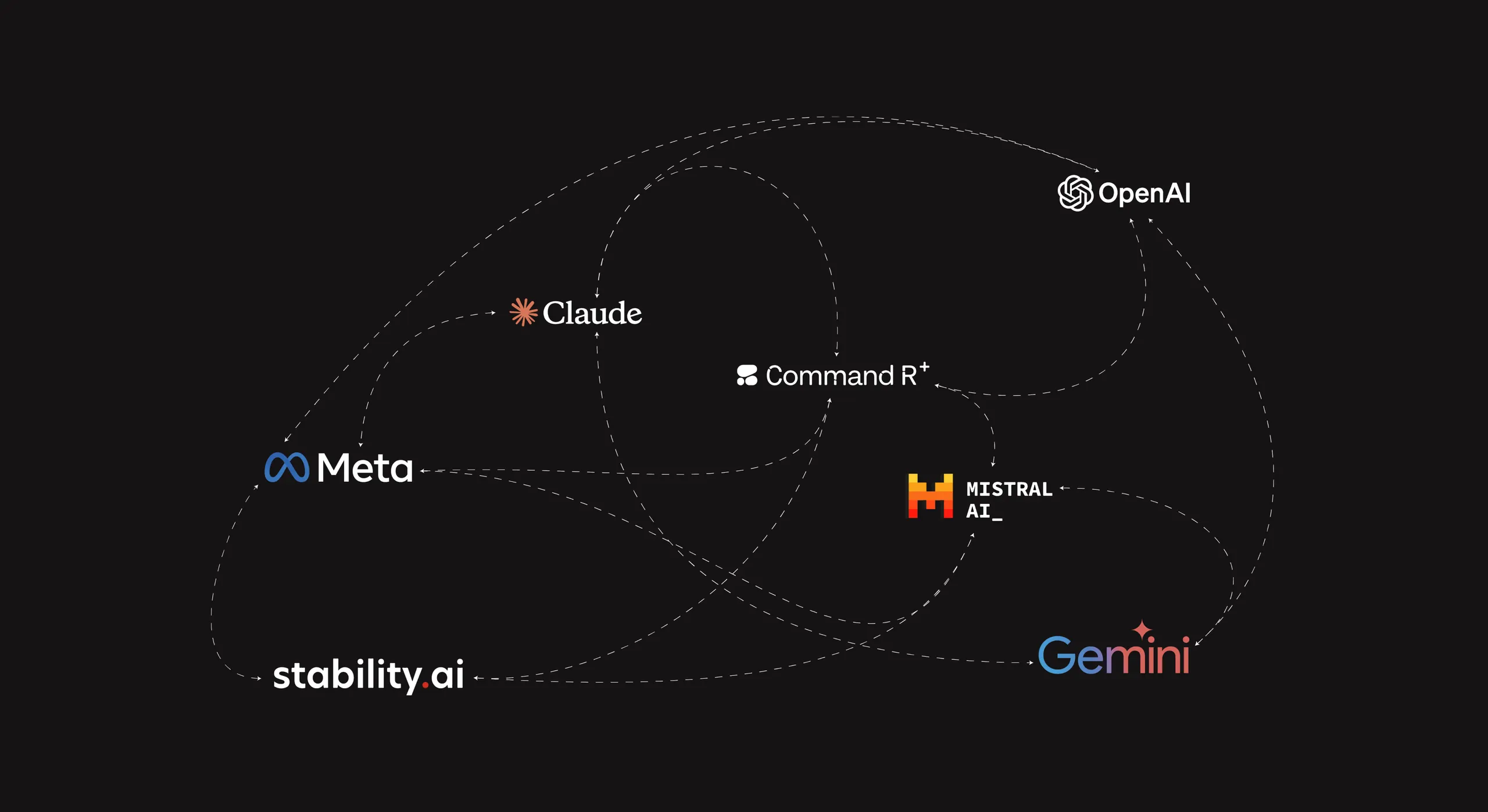

As mentioned above, by drawing on their natural language fluency, reasoning ability and emerging orchestration techniques, GenAI and LLMs give organisations an opportunity to build leading-edge enterprise applications and systems. As organisations experiment with and adopt these technologies, new and innovative use cases are emerging. At Elsewhen, we currently see the dominant use cases falling under four GenAI and LLM product paradigms.

1. Conversational Interfaces

Conversational interfaces, also known as Natural Language Interfaces (NLIs), are arguably the simplest and most common LLM product paradigm currently used in enterprises. At their core, these products connect an LLM to a knowledge base, such as a database, CMS, HR documentation or financial data, to automate question answering. Users interact with this through a conversational front end such as a chatbot, virtual assistant or customer service bot, itself powered by an LLM.
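The pattern of connecting an LLM to a knowledge base can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: word overlap stands in for the embedding search a real system would use, and `llm` is a hypothetical callable that takes a prompt string and returns the model's reply.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Document:
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lower-cased word set: a crude stand-in for embedding-based search."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by word overlap with the question; keep the top k that match at all."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d.text)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d.text)]


def build_prompt(question: str, context: list[Document],
                 history: list[tuple[str, str]]) -> str:
    """Ground the model in retrieved passages plus the conversation so far."""
    passages = "\n".join(f"[{d.title}] {d.text}" for d in context)
    turns = "\n".join(f"{role}: {msg}" for role, msg in history)
    return (
        "Answer using only the context below; if the answer is not there, say so.\n"
        f"Context:\n{passages}\n\nConversation:\n{turns}\nuser: {question}\nassistant:"
    )


def answer(question: str, docs: list[Document], history: list[tuple[str, str]],
           llm: Callable[[str], str]) -> str:
    """One conversational turn: retrieve, prompt the (hypothetical) LLM, record the turn."""
    prompt = build_prompt(question, retrieve(question, docs), history)
    reply = llm(prompt)
    history.extend([("user", question), ("assistant", reply)])
    return reply
```

Keeping `history` outside the function is what makes the exchange conversational: each new prompt carries the earlier turns, so follow-up questions can be interpreted in context.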

Importantly, previous attempts at conversational AI were limited in scope and inefficient, and often left users frustrated. Just think about all those useless chatbots you have used over the years! By leaning into natural language fluency, however, LLM-driven conversational interfaces support more human, natural and varied conversation and, in turn, deliver higher levels of user satisfaction. Furthermore, by connecting directly to in-house data, they can deliver more accurate, up-to-date and personalised interactions with users and customers.

At Elsewhen, we see information and document retrieval as a particularly compelling use case for conversational interfaces. One study found that employees spend more than 20% of their work week searching for information internally. Conversational interfaces can cut this time by connecting employees with the right information in real time. Walmart, for example, uses a number of internal conversational interfaces. Ask Sam, designed for in-store employees, lets users locate items, access store maps, look up prices, view sales information and check messages. My Assistant, designed for the company's 50,000 non-store employees, supports new-hire onboarding by offering self-serve information on the company's employee policies and benefits.

2. Workflow Automation

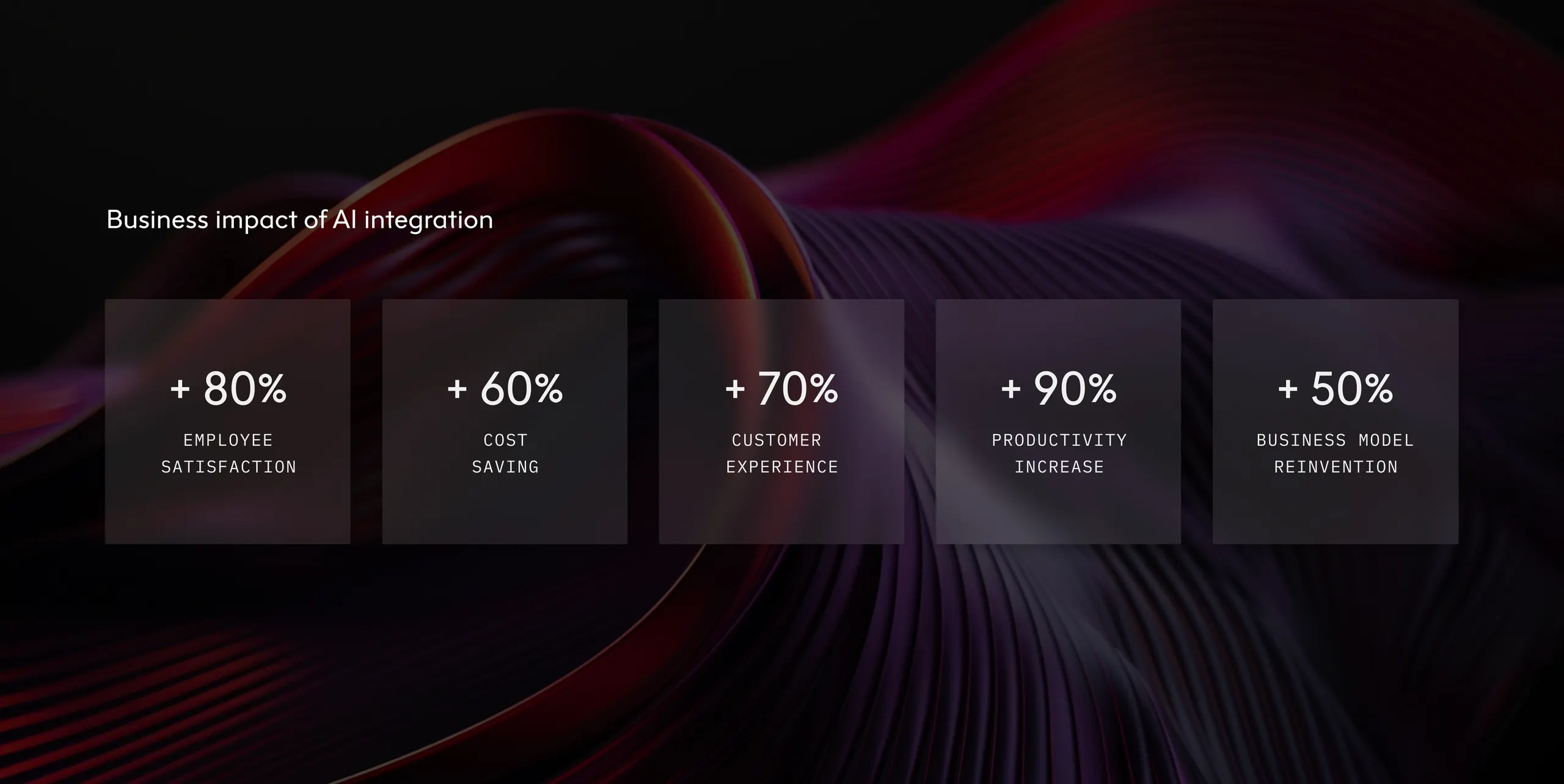

Enterprise organisations are full of business processes that vary in complexity, frequency and, importantly, the amount of manual labour they require. GenAI and LLMs present an opportunity to automate many of these distinct, predefined workflows by giving models access to data and tools. Drawing on their reasoning capabilities and access to proprietary data, LLMs can turn a business process into a behind-the-scenes pipeline of transformations, interactions and decisions.
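To make "a pipeline of transformations, interactions and decisions" concrete, here is a minimal Python sketch built around a hypothetical expense-claim process. The step names, the `APPROVAL_LIMIT` threshold and the regex and keyword logic are all assumptions for illustration; in a real system the extraction, classification and drafting steps would each call an LLM. The decision step shows one way to keep a human in the loop: anything outside policy halts the pipeline and is escalated rather than actioned.

```python
import re
from typing import Callable

State = dict
Step = Callable[[State], State]

APPROVAL_LIMIT = 100.0  # hypothetical policy threshold


def extract(state: State) -> State:
    # Transformation: pull a structured field out of free text.
    # A regex stands in here for an LLM extraction call.
    match = re.search(r"(\d+(?:\.\d+)?)", state["text"])
    state["amount"] = float(match.group(1)) if match else None
    return state


def classify(state: State) -> State:
    # Categorise the request (an LLM classifier in practice).
    travel = re.search(r"taxi|train|flight", state["text"], re.IGNORECASE)
    state["category"] = "travel" if travel else "other"
    return state


def decide(state: State) -> State:
    # Decision: auto-approve small, well-understood claims; escalate the rest.
    amount = state["amount"]
    if amount is None or amount > APPROVAL_LIMIT or state["category"] == "other":
        state["decision"] = "needs_human_review"
        state["halt"] = True
    else:
        state["decision"] = "auto_approved"
    return state


def notify(state: State) -> State:
    # Interaction: draft a message back to the employee (LLM-written in practice).
    state["message"] = f"Your {state['category']} claim of {state['amount']:.2f} was approved."
    return state


STEPS: list[Step] = [extract, classify, decide, notify]


def run_pipeline(state: State, steps: list[Step]) -> State:
    """Apply each step in order, recording a trace; a step can stop the run via state['halt']."""
    for step in steps:
        state = step(state)
        state.setdefault("trace", []).append(step.__name__)
        if state.get("halt"):
            break
    return state
```

For example, `run_pipeline({"text": "Taxi to client site, 42.50"}, STEPS)` runs all four steps and ends with `decision == "auto_approved"`, while a large or unrecognised claim stops after `decide` and waits for a person.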

The value of these workflow automations is straightforward: employee hours saved and outcomes accelerated. To unlock the most business value, however, these automations should target and streamline the specific processes that harm employee productivity, and they should be designed with a human in the loop rather than to replace people. Much of the work therefore lies in identifying which manual processes are best suited to automation with LLMs.

At Elsewhen, we have been exploring how GenAI and LLMs could be used to automate underwriting workflows. Medical underwriting, in particular, involves manually assessing a range of data sources, such as doctors' notes, application forms, claims history, underwriting manuals and other documents, to determine an appropriate premium for an insurance policy. With LLM workflow automation, we can feed these inputs into a model, automatically synthesise the information and output a recommendation to the underwriter. Because it draws on many data sources, this type of workflow automation not only improves underwriting efficiency but also creates more accurate, dynamic models of risk, deepening the understanding of risk to create better-priced, more customised policies.
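The feed, synthesise and recommend flow described above might look something like the Python sketch below. It is not Elsewhen's implementation: the source names, the `RISK_BANDS` set, the JSON reply format and the `llm` callable are hypothetical. The point it illustrates is that asking the model for structured output lets the recommendation be validated before it reaches the underwriter, who still makes the final call.

```python
import json
from dataclasses import dataclass
from typing import Callable

# Hypothetical source names and risk bands, for illustration only.
SOURCES = ("application_form", "doctors_notes", "claims_history", "underwriting_manual")
RISK_BANDS = {"standard", "loaded", "decline"}


@dataclass
class Recommendation:
    risk_band: str
    premium_loading_pct: float
    rationale: str


def build_underwriting_prompt(case: dict[str, str]) -> str:
    """Put every available source under its own heading and flag any that are missing."""
    missing = [s for s in SOURCES if s not in case]
    sections = "\n\n".join(f"## {s}\n{case[s]}" for s in SOURCES if s in case)
    return (
        "You assist a medical underwriter. Apply the underwriting manual to the applicant and "
        'reply only with JSON: {"risk_band": str, "premium_loading_pct": number, "rationale": str}.\n'
        f"Missing sources: {', '.join(missing) or 'none'}\n\n{sections}"
    )


def recommend(case: dict[str, str], llm: Callable[[str], str]) -> Recommendation:
    """Ask the (hypothetical) LLM for a structured recommendation and validate it.
    The result goes to the underwriter for review; nothing is priced automatically."""
    raw = json.loads(llm(build_underwriting_prompt(case)))
    rec = Recommendation(str(raw["risk_band"]), float(raw["premium_loading_pct"]),
                         str(raw["rationale"]))
    if rec.risk_band not in RISK_BANDS:
        raise ValueError(f"unexpected risk band: {rec.risk_band!r}")
    return rec
```

Listing missing sources explicitly in the prompt is a deliberate choice: it gives the model (and later the underwriter) a signal that the recommendation rests on incomplete evidence.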

3. Copilots

Copilots are a new product paradigm that augments workflows, increases user efficiency and can dramatically redesign how people do their jobs. Whilst the term is increasingly synonymous with Microsoft Copilot, co-piloting is simply collaboration between a human and an AI model to achieve a goal or complete a task. The AI model provides suggestions or information, or even completes parts of the task, based on the user's input. This can include drafting emails, writing code or helping with problem-solving.

Another good example is GitHub Copilot, which helps developers by explaining a piece of code or fixing an error in the real-time context of a given project. In effect, the underlying LLM is hidden behind a purpose-built developer experience. Users can “spend less time searching and more time learning” with on-the-go troubleshooting. In one controlled study, developers using Copilot completed a programming task 55.8% faster than those working without it.

However, there is potential to go much further, creating specialised AI-powered tooling that offers tailored collaboration integrated with enterprise tools, data and architecture. Enterprise-grade copilots could provide timely, contextually relevant access to data and domain knowledge that would otherwise take days to bring to the job at hand. In turn, team members free up time and cognitive capacity to tackle problems at a higher level of abstraction and iterate on solutions in real time. This helps create a flow-like employee experience while ensuring people stay in control of the final outcome.
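The two ideas in this section, contextual access to enterprise data and the human controlling the final outcome, can be illustrated with a small Python sketch. The connected "systems", the keyword matching and the `llm` callable are hypothetical stand-ins; what the sketch tries to show is the shape of the interaction: the copilot drafts, records which sources it drew on, and only the user's accept, edit or reject choice determines what is committed.

```python
import re
from dataclasses import dataclass
from typing import Callable, Literal, Optional


@dataclass
class Suggestion:
    text: str
    sources: list[str]  # which connected systems informed the draft


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def gather_context(task: str, systems: dict[str, list[str]]) -> dict[str, list[str]]:
    """Collect snippets from each connected system that share a keyword with the task."""
    keywords = {w for w in _words(task) if len(w) > 3}
    return {name: [s for s in snippets if keywords & _words(s)]
            for name, snippets in systems.items()}


def suggest(task: str, systems: dict[str, list[str]], llm: Callable[[str], str]) -> Suggestion:
    """Draft a suggestion grounded in whatever context was found."""
    context = gather_context(task, systems)
    used = [name for name, hits in context.items() if hits]
    lines = [f"[{name}] {s}" for name in used for s in context[name]]
    prompt = f"Task: {task}\nRelevant context:\n" + "\n".join(lines) + "\nDraft a response."
    return Suggestion(llm(prompt), used)


def review(suggestion: Suggestion, action: Literal["accept", "edit", "reject"],
           edited_text: Optional[str] = None) -> Optional[str]:
    """The human decides what, if anything, is committed."""
    if action == "accept":
        return suggestion.text
    if action == "edit":
        if edited_text is None:
            raise ValueError("an edit needs the user's revised text")
        return edited_text
    return None
```

Returning the list of sources alongside the draft lets the user check where a suggestion came from before accepting it, which supports the "human controls the outcome" principle above.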

4. Autonomous Agents

The most advanced product paradigm we see with GenAI and LLMs is the autonomous AI agent. By integrating knowledge, data, tools and a reasoning system, autonomous agents use LLMs to, in effect, replace the user. These agents move away from the “text in, text out” and “human in the loop” paradigms above towards a more “fire and forget” approach, in which LLMs sequence and string together end-to-end tasks with almost human-like ability.

AI agents typically draw on techniques such as Retrieval Augmented Generation (RAG) and neural information retrieval, and use either conventional software or a dedicated reasoning agent to plan and direct one or more LLMs toward a set goal. Recursive agents generate and execute tasks step by step, calling on external tools and data sources such as web browsing, external memory or enterprise data to improve their results. Crucially, at each step in the chain, the agent reacts to the output of the previous step and chooses its next action accordingly. This approach could allow autonomous agents to tackle intricate challenges independently.
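The step-by-step behaviour described above, where each action depends on the previous output, is often implemented as a plan, act and observe loop, loosely in the style of ReAct. The Python sketch below is a minimal illustration under stated assumptions: the JSON action format, the `lookup` tool, the policy ID `P-1` and `scripted_llm` (a fake model used so the example runs without an API) are all hypothetical.

```python
import json
from typing import Callable

Tool = Callable[[str], str]


def run_agent(goal: str, llm: Callable[[str], str], tools: dict[str, Tool],
              max_steps: int = 5) -> str:
    """Plan-act-observe loop: each observation is appended to the transcript, so the
    model's next action is conditioned on the output of the previous one."""
    transcript = [f"Goal: {goal}"]
    for _ in range(max_steps):
        prompt = ("\n".join(transcript) + f"\nTools: {', '.join(tools)}\n"
                  'Reply with JSON {"tool": ..., "input": ...} or {"final": ...}.')
        decision = json.loads(llm(prompt))
        if "final" in decision:
            return decision["final"]
        tool = tools.get(decision["tool"])
        observation = tool(decision["input"]) if tool else f"unknown tool {decision['tool']!r}"
        transcript.append(f"Action: {decision['tool']}({decision['input']})")
        transcript.append(f"Observation: {observation}")
    return "stopped: step limit reached"


def scripted_llm(prompt: str) -> str:
    """Stand-in for a real model: look something up once, then answer from the observation."""
    if "Observation:" not in prompt:
        return json.dumps({"tool": "lookup", "input": "policy P-1"})
    last = prompt.split("Observation: ")[-1].split("\n")[0]
    return json.dumps({"final": f"Policy status: {last}"})
```

With `tools = {"lookup": lambda q: "active" if "P-1" in q else "not found"}`, calling `run_agent("Check status of policy P-1", scripted_llm, tools)` makes one tool call and then answers from its result. The `max_steps` cap matters for "fire and forget" agents: without it, a model that never emits a final answer would loop indefinitely.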

Autonomous agents could pave the way for transformative LLM applications across diverse sectors and functions. Returning to underwriting, AI agents might offer another opportunity to streamline processes. Here, our previous underwriting workflow automation could be packaged alongside a pipeline of LLM-based agents that request more information from the applicant, write and send an offer letter or even request and apply for a reinsurance policy.